The EU’s AI Policy

This blog post is a continuation of my ongoing series on AI. I’ve covered a few things already, ranging from basic definitions to certain models’ pitfalls to ethical considerations. Check those out as well here.

The EU has just voted to implement the most comprehensive AI policy to date.

What Is the AI Act?

The Artificial Intelligence Act is specifically focused on “human-centric AI” and aims to regulate any product or service that uses the technology.

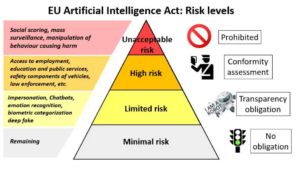

The AI Act classifies systems and applications based on their potential risk to society, from "low to limited to high, up to unacceptable." The treatment varies by category: low-risk systems face no requirements or regulation, while unacceptable-risk systems are banned altogether in the EU.

According to Teri Schultz of NPR, "At the low-risk end are applications such as AI in video games or spam filters… And at the unacceptable end are uses such as biometric identification, which will be prohibited in public spaces, except in special cases, like law enforcement or counterterrorism."

Can’t say I’m super in love with the idea of law enforcement getting special privileges for biometric identification, considering their use of it is one of the chief ethical concerns when it comes to AI, but I guess I’ll take what we can get.

Implementation of the AI Act

Enforcement comes with some caveats. Individual member states are responsible for evaluating and enforcing the Act, but the EU has established an office in Brussels to help coordinate enforcement and make sure regulation is applied fairly. The office will also provide guidance to companies and developers to help them stay in compliance.

Of course, those companies lobbied heavily against the legislation. I suppose I can at least enjoy a bit of schadenfreude at their absolute L.

Future Considerations: What It Means for Us

The Act has not fully passed yet; it's still awaiting final approval, which should come in the next couple of months. After that, its provisions will be phased in gradually over the following two years.

The AI Act is not perfect; legislation rarely is. But it is by far the best effort to date, and we could stand to learn something from it. If nothing else, we should put specific, intentional effort into studying the results of the Act and analyzing its successes and failures.

The bottom line is that, as with anything else, we must listen to experts. This Act is guided by their knowledge, and that’s precisely why it’s the best effort to date.

I, for one, am excited to see how this pans out. I do worry that it will be too little, too late, and that by the time it's in place it will already be obsolete, as is often the concern with regulating technology. But it's a start, and I can live with that for now.