What Are AI Hallucinations?

What are AI hallucinations, and why do they matter?

“Hallucination” is a fancy word, but you can think of it as any time an AI model confidently spits back information that is inaccurate or simply made up.

AI Hallucinations in Practice

A good example of an AI hallucination comes from ChatGPT. For a time (this has since been corrected), if you asked it what the capital of Australia is, it would tell you it was Sydney. (It’s Canberra, btw. And yes, I did have to look that up, but I didn’t use ChatGPT.)

It said the capital is Sydney because a lot of the text it was trained on treated that as correct (Sydney is Australia’s largest and best-known city, so it’s an easy mix-up). A model like ChatGPT doesn’t look facts up in a database; it generates answers from patterns it learned during training, so it has no independent way to verify what it says. In effect, it’s responsible for fact-checking itself, and the only thing it can check against is the same training that produced the wrong answer in the first place. It’s a closed loop where the model is the only thing checking its own outputs.

How to Deal with AI Hallucinations

Some projects, such as SelfCheck, have been proposed as third-party integrations that would help keep these models accurate, but nothing like that is in place yet. That poses a significant risk of spreading disinformation or misinformation. Disinformation is deliberate: imagine a model built with intentionally bad information. Misinformation is accidental: imagine a model built with no intention to mislead, but still relying on flawed data and inherent biases that its architects either aren’t aware of or don’t correct for.

For now, we consider most hallucinations to be the result of flawed modeling, not a nefarious plot. Even the people building modern AI systems admit that these flaws are built into the models.

So, what can be done? That’s a great question, and I’m going to dig into it in future posts as I explore what ethical AI use looks like and how regulation can help keep us from straying too far.

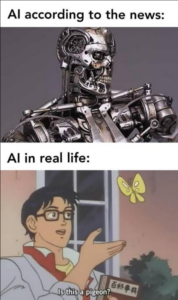

Don’t Sound the Skynet Alarms Yet

AI is powerful and exciting, and it’s not going away. We need to learn to live with it and establish best practices for ethical use, so that it’s much harder to use it to spread disinformation and cause harm. We can do that; we just have to start.

I’m going to keep talking about AI and how it fits into our world over the coming weeks. If you’re interested in learning more, consider following my socials: it’s free and helps my mission a lot. You’ll also be the first to see any educational posts I put up, and you’ll be that much better equipped to navigate our modern world.

Thanks for tuning in. I’ll be back with more soon.